Percepts: Knowledge of the map; a 10-cell circular field of vision

Actions: setDirection(int angle), moveDirection(int angle, int distance), moveForward(int distance), attackEnemy(int angle), movePowerUp(Node* n), moveFlag(Node* n)

Goals: Capture the opponent's flag and return it to own base; Prevent the opponent from capturing own flag by attacking opponents; Obtain power-ups for easier goal attainment

Environment: A 4096x4096 map discretized into a 128x128 grid of cells. Each cell is 32 units x 32 units.
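The discretization and the field of vision described above can be illustrated with a minimal C++ sketch. The map, grid, and vision sizes come from the description; the `Cell` type and the function names `worldToCell` and `inFieldOfVision` are hypothetical and are not taken from the project's code. The sketch also assumes that "10 cell" refers to the radius of the vision circle and that the boundary is inclusive.

```cpp
#include <cassert>

// Sizes taken from the Environment and Percepts descriptions.
constexpr int kMapSize = 4096;                   // world units per side
constexpr int kGridSize = 128;                   // cells per side
constexpr int kCellSize = kMapSize / kGridSize;  // world units per cell
constexpr int kVisionRadius = 10;                // assumed: radius in cells

static_assert(kCellSize == 32, "each cell should be 32 x 32 units");

// Hypothetical cell type; the project's own Node type is not shown.
struct Cell {
    int col;
    int row;
};

// Map a world position to the grid cell that contains it.
// Assumes 0 <= x, y < kMapSize.
Cell worldToCell(int x, int y) {
    assert(x >= 0 && x < kMapSize && y >= 0 && y < kMapSize);
    return Cell{x / kCellSize, y / kCellSize};
}

// True if `target` lies inside the circular field of vision centered on
// `self`. Squared distances are compared so the check stays in integers.
bool inFieldOfVision(Cell self, Cell target) {
    const int dc = target.col - self.col;
    const int dr = target.row - self.row;
    return dc * dc + dr * dr <= kVisionRadius * kVisionRadius;
}
```

For example, `worldToCell(4095, 0)` lands in column 127, row 0, and a cell offset by (6, 8) from the agent sits exactly on the edge of the assumed vision circle.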

Dynamic Agent “Personalities” - One of the main focuses of our project was giving our agents goals that shift dynamically throughout the game. Depending on its current percepts, each agent establishes the goal that is most relevant to the current situation. The AI group compiled an assortment of these "dynamic personalities", including the following, with their respective goals:

A* Search Path Finding - Most of our path finding relied on simple, existing search algorithms. Our original implementation used a greedy search, which considered only the heuristic estimate of the distance to the goal and ignored the actual path cost accumulated so far. To incorporate both factors, we implemented an A* search algorithm in all of our agents. A* search drives most of the movement on the map, including finding an opponent's flag, returning to a base, and finding power-ups. It is also used to route around obstacles on the map, including walls and bases.
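The difference between the greedy search and A* can be made concrete with a small sketch. This is not the project's implementation: the `Cell` type, the function names, 4-connected movement with unit step cost, and the Manhattan-distance heuristic are all assumptions chosen for illustration. The key point is that the priority is f = g + h (path cost so far plus heuristic estimate), whereas a greedy search would order the queue by h alone.

```cpp
#include <cassert>
#include <cstdlib>
#include <functional>
#include <queue>
#include <tuple>
#include <vector>

// Hypothetical grid cell; the project's Node type is not shown in the report.
struct Cell {
    int col;
    int row;
};

// Manhattan distance: never overestimates the cost of a path made of
// unit-cost steps in the four axis directions.
int heuristic(Cell a, Cell b) {
    return std::abs(a.col - b.col) + std::abs(a.row - b.row);
}

// Returns the number of steps on a shortest path from start to goal, or -1
// if the goal cannot be reached. blocked[row][col] marks obstacles such as
// walls and bases. Assumes a rectangular grid with start and goal inside it.
int aStarSteps(const std::vector<std::vector<bool>>& blocked, Cell start, Cell goal) {
    const int rows = static_cast<int>(blocked.size());
    const int cols = rows > 0 ? static_cast<int>(blocked[0].size()) : 0;
    assert(start.row >= 0 && start.row < rows && start.col >= 0 && start.col < cols);
    assert(goal.row >= 0 && goal.row < rows && goal.col >= 0 && goal.col < cols);

    // Best known path cost g for each cell; -1 means not reached yet.
    std::vector<std::vector<int>> best(rows, std::vector<int>(cols, -1));

    // Entries are (f = g + h, g, col, row); the smallest f is expanded first.
    using Entry = std::tuple<int, int, int, int>;
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> open;

    best[start.row][start.col] = 0;
    open.emplace(heuristic(start, goal), 0, start.col, start.row);

    const int dc[4] = {1, -1, 0, 0};
    const int dr[4] = {0, 0, 1, -1};

    while (!open.empty()) {
        const Entry top = open.top();
        open.pop();
        const int cost = std::get<1>(top);
        const int col = std::get<2>(top);
        const int row = std::get<3>(top);
        if (cost > best[row][col]) continue;  // stale entry, a cheaper one was found
        if (col == goal.col && row == goal.row) return cost;

        for (int i = 0; i < 4; ++i) {
            const int nc = col + dc[i];
            const int nr = row + dr[i];
            if (nc < 0 || nr < 0 || nc >= cols || nr >= rows) continue;
            if (blocked[nr][nc]) continue;  // route around obstacles
            const int ncost = cost + 1;
            if (best[nr][nc] != -1 && best[nr][nc] <= ncost) continue;
            best[nr][nc] = ncost;
            open.emplace(ncost + heuristic(Cell{nc, nr}, goal), ncost, nc, nr);
        }
    }
    return -1;
}
```

The sketch returns only the path length to stay short; recovering the actual route would require storing a parent cell for each entry in `best` and walking back from the goal.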

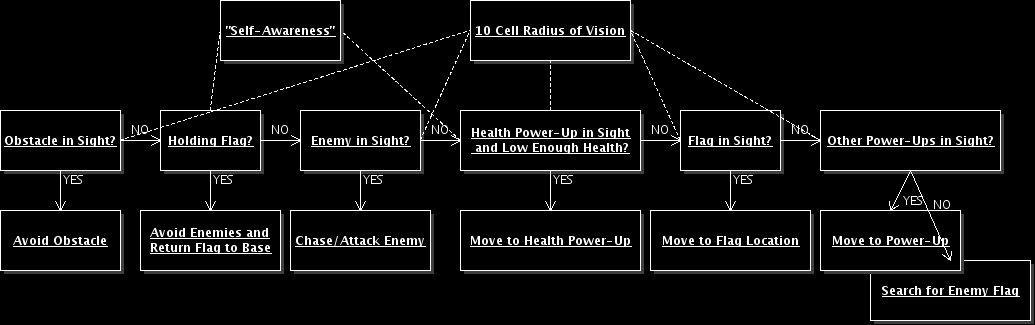

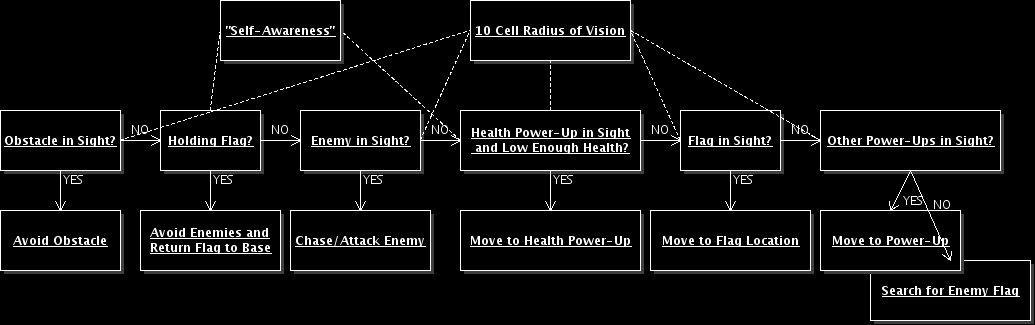

Block Diagram of a Smart Agent - The following block diagram represents the sequence of percept interpretation and actions of a smart agent:

Test Simulations

Project Successes - In the end, our agents were pretty hard to beat. With angry agents that attack you whenever you are within their radius, defense players that patrol the flag, waiting for any player to come near the base, and smart players that can get invisibility power-ups and walk right past you, the intelligence of the agents creates a well-formed team. Their dynamic "personalities" helped the agents adapt to the constantly changing percepts throughout the game and take the appropriate actions. Although the agents do not interact with one another, acting on their own they can still outperform a human player. Most of the time, we developers were able to beat the AI, which we think is mostly because of our knowledge of and experience with the agents. Not only have we played this game several times to test it out, we also implemented the "intelligence" of each agent. Players who have not played before are sure to be in for a challenge!

Steps for Improvement - In the beginning, we set many goals for ourselves, as we were very excited to design and implement such an excellent game. We wanted to put as many features in the game as we possibly could without overburdening ourselves. At the end of the quarter, we found that most of these features had been implemented; however, a few things were left out:

Conclusion - In the end, we found that our agents functioned much like human players. There were noticeable moments when an agent behaved much more like an agent, for example, when the angry player would rather attack an opponent than return to its base while holding a flag. For the most part, however, not only was our team quite impressed, we also received positive feedback on our final presentation/demo from our peers and professor. We believe that the dynamic personalities were key to our project's success, and that the approach is quite useful in other situations with constantly changing percepts.

In conclusion, our AI was a success!!